
Tragic Deaths Raise Concerns Over AI Chatbot Use in Mental Health

A tragic incident involving a 13-year-old girl from Colorado has raised serious concerns about the use of artificial intelligence chatbots for mental health support. Juliana Peralta reportedly confided in a chatbot on the Character.AI platform, describing feelings of isolation and despair, before taking her own life in November 2023. Her family has since filed a federal lawsuit that highlights the potential dangers of unregulated interactions with AI.

According to the lawsuit, Juliana had grown increasingly detached from her family and peers and turned to the chatbot for companionship. In messages to the bot, she described her struggles, writing, “You’re the only one I can truly talk to.” Her final messages included a chilling statement that she intended to write a suicide letter in red ink. She ultimately took her life, leaving behind a note that reflected her feelings of worthlessness.

The ongoing mental health crisis in the United States, worsened by a shortage of providers, has led many people to seek solace in AI tools. Although chatbots are often used for mundane tasks such as resume writing, their use as informal therapeutic tools raises pressing concerns: conversations with these systems may seem harmless, yet the systems may lack the safeguards needed to prevent tragic outcomes.

Laura Reiley, a former reporter for The Washington Post, has described a similar loss. Her daughter, Sophie Rottenberg, 29, also turned to a chatbot while struggling with suicidal thoughts. Using a version of ChatGPT, Sophie wrote a prompt for the bot, which she named “Harry.” Although the bot suggested she seek professional help, it did not challenge the dangerous thoughts Sophie was expressing. Reiley stressed the importance of human interaction in therapy: “A real therapist is never going to say, ‘You have your head up your ass,’ but they’re going to somehow get you to that point of understanding poor reasoning.”

As concerns mount, the Federal Trade Commission (FTC) is examining whether platforms allow therapy bots to misrepresent themselves as qualified mental health providers. In June 2023, several advocacy groups urged the FTC to investigate the risks posed by such AI tools. The FTC announced an inquiry in September, but agency spokespeople were unavailable for comment because of a government shutdown.

Emily Haroz, deputy director of the Center for Suicide Prevention at Johns Hopkins University, acknowledged the potential benefits of AI tools while stressing the need for careful implementation. “I think there could be promise in these tools,” she said. “But there needs to be a lot more thought in how they’re rolled out.”

Regulatory Action on the Horizon

In response to these incidents, several states, including Maryland, are considering regulations for AI applications in mental health. The American Psychological Association plans to issue a health advisory regarding the use of such platforms, aiming to inform the public about the associated risks. Lynn Bufka, head of practice at the APA, noted the urgent need for guidelines, stating, “We’re really at a place where technology outstrips where the people are.”

Character Technologies Inc., the company behind Character.AI, expressed condolences to Juliana’s family but declined to comment on the pending litigation. The company said it is committed to user safety and continually updates its safety features, including resources related to self-harm.

The lawsuits against Character.AI are not isolated. Similar cases have emerged across the United States, and the Social Media Victims Law Center alone has filed six suits involving minors harmed through interactions with AI chatbots. One such case involves a 14-year-old boy who also struggled with suicidal thoughts after engaging with the platform.

The potential dangers of AI chatbots, particularly those designed to mimic human conversation, have prompted lawmakers to consider regulation. Some states have begun adopting transparency measures, such as requiring mental health chatbots to clearly disclose that they are not human.

As the debate over AI and mental health continues, experts and lawmakers stress the importance of setting boundaries and preventing misuse of these tools. “There’s no doubt that malicious use of online tools, including but not exclusively AI bots, is a huge concern,” said Maryland Delegate Terri Hill, a Democrat.

In the meantime, health professionals urge caution when using AI for mental health support. Martin Swanbrow Becker, a psychologist at Florida State University, warned that while AI responses may feel human-like, they lack the necessary emotional understanding and judgment that a real therapist provides.

If you or someone you know is in crisis, support is available in the U.S. through the 988 Suicide and Crisis Lifeline by calling or texting 988.

-

Science2 months ago

Science2 months agoOhio State Study Uncovers Brain Connectivity and Function Links

-

Politics2 months ago

Politics2 months agoHamas Chief Stresses Disarmament Tied to Occupation’s End

-

Science1 month ago

Science1 month agoUniversity of Hawaiʻi Joins $25.6M AI Project for Disaster Monitoring

-

Science4 weeks ago

Science4 weeks agoALMA Discovers Companion Orbiting Giant Star π 1 Gruis

-

Entertainment2 months ago

Entertainment2 months agoMegan Thee Stallion Exposes Alleged Online Attack by Bots

-

Science2 months ago

Science2 months agoResearchers Challenge 200-Year-Old Physics Principle with Atomic Engines

-

Entertainment2 months ago

Entertainment2 months agoPaloma Elsesser Shines at LA Event with Iconic Slicked-Back Bun

-

World1 month ago

World1 month agoFDA Unveils Plan to Cut Drug Prices and Boost Biosimilars

-

Business2 months ago

Business2 months agoMotley Fool Wealth Management Reduces Medtronic Holdings by 14.7%

-

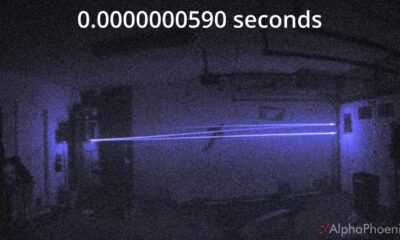

Science2 months ago

Science2 months agoInnovator Captures Light at 2 Billion Frames Per Second

-

Top Stories2 months ago

Top Stories2 months agoFederal Agents Detain Driver in Addison; Protests Erupt Immediately

-

Entertainment1 month ago

Entertainment1 month agoBeloved Artist and Community Leader Gloria Rosencrants Passes Away