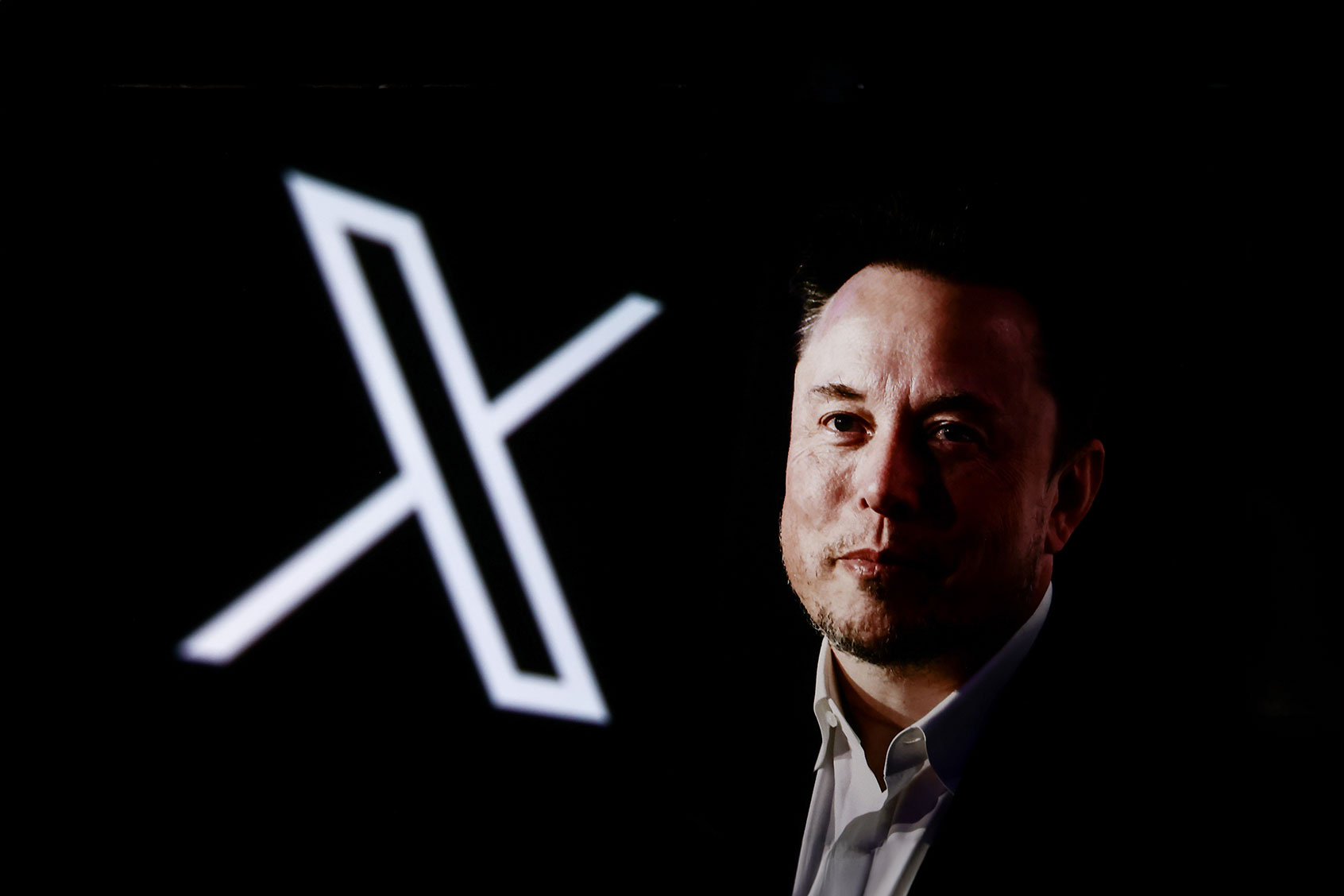

Social Media Faces Scrutiny as Elon Musk Reshapes X’s Policies

Recent developments in social media governance have sparked significant debate, particularly following changes implemented by Elon Musk after his acquisition of Twitter, now rebranded as X. Many observers believe that the platform’s new direction has led to an escalation in the spread of misinformation, raising concerns about the impact on political discourse in the United States.

The origins of these challenges can be traced back to 2014, when BuzzFeed News highlighted the activities of the Internet Research Agency, a Russian organization that orchestrated a coordinated propaganda effort across various social media platforms. This campaign involved creating fake accounts and manipulating discussions to influence American politics. Despite more than a decade of awareness about such foreign interference, the U.S. continues to grapple with the complexities of regulating these threats.

Upon purchasing Twitter in 2022, Musk reinstated several accounts that had been banned for promoting hate speech and disinformation, including that of former President Donald Trump. A 2024 CNN analysis of 56 pro-Trump accounts on X identified a “systematic pattern of inauthentic behavior,” with some accounts even displaying blue check marks that indicate official verification. Alarmingly, eight of these accounts utilized stolen images of European influencers to bolster their credibility.

Musk’s leadership at X has seen the dismantling of key teams and mechanisms that previously aimed to mitigate the spread of false information. Under his stewardship, the platform’s monetization strategy has increasingly rewarded engagement, which is often driven by divisive cultural issues. The result is a feedback loop in which outrage is used to build support for policy changes, particularly during periods of conflict within political factions.

In October 2025, as tensions rose among Trump’s supporters, Nikita Bier, X’s head of product, proposed a new feature aimed at revealing the geographical locations of users. The urgency for action escalated after Katie Pavlich of Fox News publicly urged Musk to address the issue of foreign bots that she claimed were “tearing America apart.” Bier responded, promising to implement changes within 72 hours.

The rollout of this feature was chaotic and marred by errors, yet it received praise from several conservative figures, including Florida’s Governor Ron DeSantis and right-wing podcaster Dave Rubin. Rubin was among the commentators paid by Tenet Media, a company that a 2024 Justice Department indictment alleged was covertly funded by employees of Russian state media. Investigations revealed that numerous prominent MAGA accounts were operating from Nigeria, raising further concerns about the authenticity of online political engagement.

The episode highlights the dangers posed by the new location-exposing feature, particularly for journalists working under authoritarian regimes. It also shows that many users understand that impersonating right-wing activists yields higher engagement, and that they game the system accordingly. Bier acknowledged that X’s data was not entirely reliable, and the feature was temporarily withdrawn amid user warnings about the risks of revealing personal information without proper safeguards.

The implications of these developments extend beyond political discourse. A decade ago, political reporters were among the most active users on Twitter, using the platform for breaking news and commentary. However, as the integrity of the platform has diminished, the risks to journalists have increased, particularly in light of the rise of inauthentic accounts.

Concerns about safety are not exclusive to X. Testimony from Vaishnavi Jayakumar, former head of safety and well-being at Instagram, revealed that the platform employed a “17x” strike policy for accounts involved in human trafficking. Under this policy, an account could violate the platform’s rules repeatedly and would be suspended only on the 17th violation. Furthermore, internal studies by Meta, Instagram’s parent company, allegedly indicated a link between social media use and negative mental health outcomes, yet the company reportedly chose to prioritize revenue over user safety.

The current landscape of social media underscores the challenges inherent in maintaining user safety while fostering engagement. Rage-inducing content, whether generated domestically or internationally, remains difficult to control. Though algorithms could provide a solution, the prevailing business model incentivizes growth and engagement at the expense of transparency and accountability.

As discussions around these issues continue, it is evident that mere calls for improved content moderation or transparency are insufficient. What is urgently needed is systemic regulatory reform that addresses the underlying issues within social media platforms. The industry must confront the reality that it has built systems designed for profit rather than public welfare.
